Signs on I-280 up the San Francisco peninsula proclaim it the “World's Most Beautiful Freeway.” It’s best when the fog rolls over the hills into the valley, as in this picture I took last summer.

That fog is not just pretty, it’s also the natural refrigerator responsible for California’s famously perfect weather. Clouds in the right place work wonders.

What is Fog?

This is a perfect analogy for the future of Industrial Internet of Things (IIoT) computing. In weather, fog is the same thing as clouds, only close to the ground. In the IoT, fog is defined as cloud technology close to the things. Neither is a precise term, but it's true in both cases: clouds in the right place work wonders.

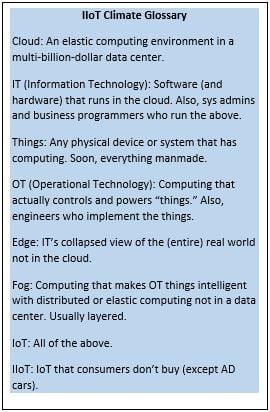

The major industry consortia, including the Industrial Internet Consortium (IIC) and the OpenFog Consortium, are working hard to better define this future. All agree that many aspects that drive the spectacular success of the cloud must extend beyond data centers. They also agree that the real world poses challenges that cloud systems do not handle. They also bandy about names and brand positioning; see the sidebar for a quick weather map. By any name, the fog, or layered edge computing, is critical to the operation of the industrial infrastructure.

Perhaps the best way to understand fog is to examine real use cases.

Example: Connected Medical Devices

Consider first the coming future of intelligent medical systems. The driving issue is an alarming fact: the third leading cause of death in the US is hospital error. Despite extensive protocols that check and recheck assumptions, device alarms, training on alarm fatigue, and years of experience, the sad truth is that hundreds of thousands of people die every year because of miscommunications and errors. It is increasingly clear that compensating for human error in such a complex environment is not the solution. The best path is to use technology to take better care of patients.

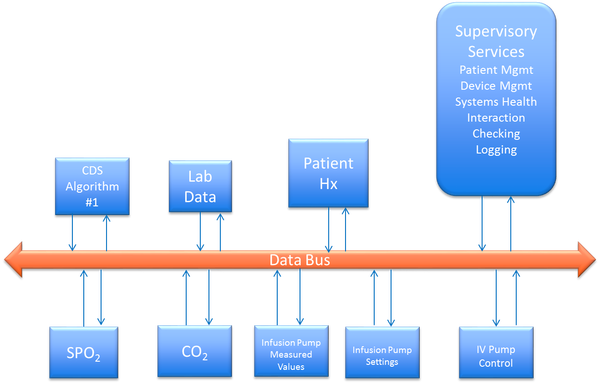

The Integrated Clinical Environment standard is a leading effort to create an intelligent, distributed system to monitor and care for patients. The key idea is to connect medical devices to each other and to an intelligent “supervisory” computing function. The supervisor acts like a tireless member of the care team, checking patient status and intelligently alerting human caretakers or even taking autonomous actions when there are problems.

- The supervisor combines and analyzes oximeter, capnometer, and respirator readings to reduce false alarms and stop drug infusion to prevent overdose. The DDS “databus” connects all the components with real-time reliable delivery.
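As a rough illustration of that supervisory logic, here is a minimal Python sketch. The thresholds, field names, and the `assess` function are hypothetical placeholders chosen for the example; they are not clinical values and not part of the ICE standard. The point is only that cross-checking several devices lets the supervisor tell a likely sensor artifact from a real problem.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; not clinical guidance.
SPO2_LOW = 90.0        # percent oxygen saturation
ETCO2_LOW = 25.0       # mmHg end-tidal CO2
RESP_RATE_LOW = 8.0    # breaths per minute


@dataclass
class Vitals:
    spo2: float        # from the oximeter
    etco2: float       # from the capnometer
    resp_rate: float   # from the respirator


def assess(v: Vitals) -> str:
    """Combine three readings into one decision.

    A single abnormal reading is treated as a possible sensor artifact
    (for example, a slipped oximeter probe), so it only asks for a check.
    Two or more abnormal readings corroborate each other, so the
    supervisor stops the infusion and raises an alarm.
    """
    abnormal = sum([
        v.spo2 < SPO2_LOW,
        v.etco2 < ETCO2_LOW,
        v.resp_rate < RESP_RATE_LOW,
    ])
    if abnormal >= 2:
        return "STOP_INFUSION_AND_ALARM"
    if abnormal == 1:
        return "CHECK_SENSOR"
    return "OK"
```

A real supervisor would work on waveforms and trends rather than single samples, which is one reason the devices need fast, reliable data exchange.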

This sounds simple. However, consider the real-world challenges. The problem is not just the intelligence. Current medical devices do not communicate at all. They have no idea that they are connected to the same patient. There’s no obvious way to ensure data consistency, staff monitoring, or reliable operation.

Worse, the above diagram shows only one patient. That’s not the reality of a hospital, which has hundreds or thousands of beds. Patients move between rooms every day. The environment includes a mix of wired and wireless networks. Finding and delivering information within the treatment-critical environment is a formidable challenge.

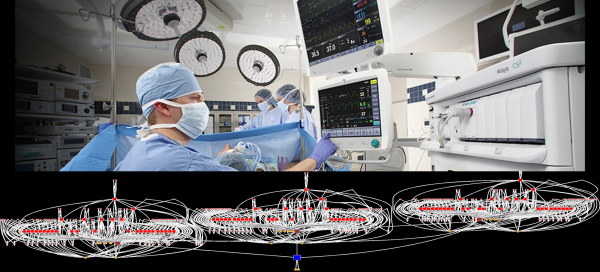

- A realistic hospital environment includes thousands of patients and hundreds of thousands of devices. Reliable monitoring technology must find the right patient and guarantee delivery of that patient’s data to the right analysis or staff. In the connectivity map above, every red dot is a “fog routing node”, responsible for passing the right data up to the next layer.

This scenario exposes the key need for a layered fog system. Complex systems like this must build from hierarchical subsystems. Each subsystem shares internal data, with possibly complex dataflow, to execute its functions. For instance, a ventilator is a complex device that controls gas flows, monitors patient state, and delivers assisted breathing. Internally, it includes many sensors and motors and processors that share this data. Externally, it presents a much simpler interface that conveys the patient’s physiological state. Each of the hundreds of types of devices in a hospital faces a similar challenge. The fog computing system must exchange the right information up the chain at each level.

Note that this use case is not a good candidate for cloud-based technology. These machines must exchange fast, real-time data flows, such as signal waveforms, to properly make decisions. Also, patient health is at stake. Thus, each critical component will need a very reliable connection and even redundant implementation for failover. Those failovers must occur in a matter of seconds. It’s not safe or practical to rely on remote connections.
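One way to picture local failover between redundant components is a reader that tracks heartbeats and always listens to the strongest source that is still alive. The sketch below is an assumption-laden toy: the `Source` class, the `select_active` function, and the lease value are hypothetical names for this example. It loosely mirrors the liveliness and ownership-strength ideas found in DDS, but it is not DDS API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Source:
    name: str
    strength: int          # higher wins when several sources are alive
    last_heartbeat: float  # seconds, on the reader's clock


def select_active(sources: List[Source], now: float,
                  lease_s: float) -> Optional[Source]:
    """Return the strongest source whose heartbeat is within the lease.

    A source that has been silent for longer than lease_s is considered
    dead, so the reader falls over to the next strongest one.
    Returns None when no source is alive.
    """
    alive = [s for s in sources if now - s.last_heartbeat <= lease_s]
    return max(alive, key=lambda s: s.strength, default=None)
```

Because the decision is made locally from heartbeat timing, failover time is bounded by the lease length rather than by a round trip to a remote data center.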

Example: Autonomous Cars

The “driverless car” is the most disruptive innovation in transportation since the “horseless carriage”. Autonomous Drive (AD) cars and trucks will change daily life and the economy in ways that are hard to imagine. They will move people and things faster, safer, cheaper, farther, and easier than the primitive “bio-drive” cars of the last century. And the economic impact is stunning; 30% of all US jobs will end or change; trucking, delivery, traffic control, urban transport, child & elder care, roadside hotels, restaurants, insurance, auto body, law, real estate, and leisure will never again be the same.

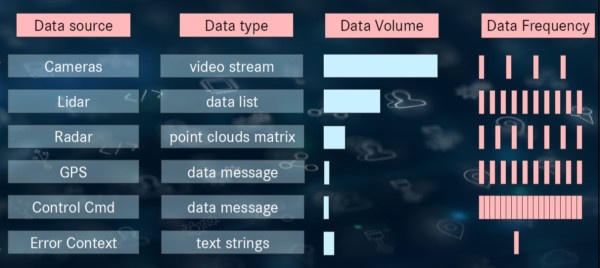

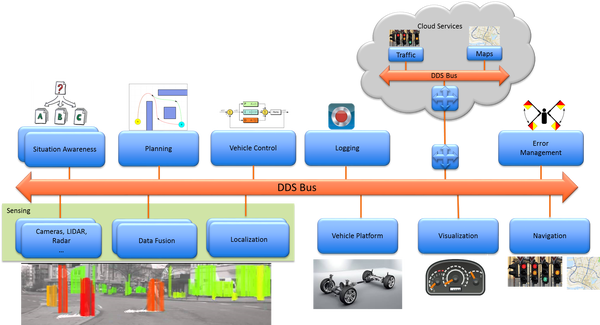

- Autonomous car software exchanges many data types and sources. Video and Lidar sensors are very high volume; feedback control signals are fast. Infrastructure that reliably sends exactly the right information to exactly the right places at the right time makes system development much easier. The vehicle thus combines the performance of embedded systems with the intelligence of the cloud…aka fog.

Intelligent vehicles are complex distributed systems. An autonomous car combines vision, radar, lidar, proximity sensors, GPS, mapping, navigation, planning, and control. These components must work together as a reliable, safe, secure system that can analyze complex environments in real time and react to negotiate chaotic conditions. Autonomy is thus a supreme technical challenge. An autonomous car is more a robot on wheels than it is a car. Automotive vendors suddenly face a very new challenge. They need fog.

- Fog integrates all the components in an autonomous car design. Each of these components is a complex module on its own. As in the hospital patient monitoring case, this is only one car; fog routing nodes (red) are required to integrate subsystems and connect the car into a larger cloud-based system. This system also requires fast performance, extreme reliability, integration of many types of dataflow, and controlled module interactions. Note that cloud-based applications are also critical components. Fog systems must seamlessly merge with cloud-based applications as well.

How Can Fog Work?

So, how can this all work? I’ve hinted at a few of the requirements above. Connectivity is perhaps the greatest challenge. Enterprise-class technologies cannot deliver the performance, reliability, redundancy, and distributed scale that IIoT systems need.

The key insight is that systems are all about the data. The enabling technology is data-centricity.

A data-centric system has no hard-coded interactions between applications. When applied to fog connectivity, this concept overcomes problems associated with point-to-point system integration, such as lack of scalability, interoperability, and the ability to evolve the architecture. It enables plug-and-play simplicity, scalability, and exceptionally high performance.

The leading standard for data-centric connectivity is the Data Distribution Service (DDS). DDS is not like other middleware. It directly addresses real-time systems. It features extensive fine control of real-time Quality of Service (QoS) parameters, including reliability, bandwidth control, delivery deadlines, liveliness status, resource limits, and security. It explicitly manages the communications “data model”, or types and QoS used to communicate between endpoints. It is thus a “data-centric” technology.

DDS is all about the data: finding data, communicating data, ensuring fresh data, matching data needs, and controlling data. Like a database, which provides data-centric storage, DDS understands the contents of the information it manages. This data-centric nature, analogous to a database, justifies the term “databus”.


- Traditional communications architectures directly connect applications. This connection takes many forms, including messaging, remote object-oriented invocation, and service-oriented architectures. Data-centric systems fundamentally differ because applications interact only with the data and properties of data. Data-centricity decouples applications and greatly eases scalability, interoperability, and integration. Because many applications may interact with the data independently, data-centricity also makes redundancy natural.

Note that the databus replaces the application-application interaction with application-data-application interaction. This abstraction is the crux of data-centricity and it’s absolutely critical. Data-centricity decouples applications and greatly eases scaling, interoperability, and system integration.

Continuing the analogy above, a database implements this same trick for data-centric storage. It saves old information that you can later search by relating properties of the stored data. A databus implements data-centric interaction. It manages future information by letting you filter by properties of the incoming data. Data-centricity makes a database essential for large storage systems. Data-centricity makes a databus a fundamental technology for large software-system integration.
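To make "filter by properties of the incoming data" concrete, here is a small in-memory sketch. `ToyDatabus`, its methods, and the `HeartRate` topic are hypothetical names invented for this example and are not the DDS API. Each subscriber states what it wants (a content predicate and a minimum separation between samples), and the publisher never learns who is listening.

```python
from collections import defaultdict
from typing import Callable, Dict

Sample = Dict[str, float]


class ToyDatabus:
    """In-memory stand-in for a databus. Illustration only, not DDS."""

    def __init__(self) -> None:
        self._subs = defaultdict(list)  # topic -> list of subscriptions

    def subscribe(self, topic: str, callback: Callable[[Sample], None],
                  where: Callable[[Sample], bool] = lambda s: True,
                  min_separation_s: float = 0.0) -> None:
        # The subscriber declares its data needs; it names no publisher.
        self._subs[topic].append({
            "callback": callback,
            "where": where,
            "min_sep": min_separation_s,
            "last": None,  # time of the last delivered sample
        })

    def publish(self, topic: str, sample: Sample, now: float) -> None:
        # The publisher writes data; the bus decides who receives it.
        for sub in self._subs[topic]:
            if not sub["where"](sample):
                continue  # filtered out by content
            if sub["last"] is not None and now - sub["last"] < sub["min_sep"]:
                continue  # filtered out by rate
            sub["last"] = now
            sub["callback"](sample)


bus = ToyDatabus()
alarms, trend = [], []
bus.subscribe("HeartRate", alarms.append, where=lambda s: s["bpm"] > 120)
bus.subscribe("HeartRate", trend.append, min_separation_s=1.0)
for t, bpm in [(0.0, 80), (0.5, 130), (1.0, 90), (1.2, 125)]:
    bus.publish("HeartRate", {"bpm": bpm}, now=t)
```

Here the alarm application sees only the high readings, while the trending application sees at most one sample per second. In this toy the filtering happens in one process; a real databus can apply such filters across the network.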

The databus automatically discovers and connects publishing and subscribing applications. No configuration changes are required to add a new smart machine to the network. The databus matches and enforces QoS. The databus insulates applications from the execution, or even existence, of other applications. As long as its data specifications are met, an application can run successfully.
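QoS matching can be pictured as a comparison between what a publisher offers and what a subscriber requests. The sketch below models two of the policies mentioned earlier, reliability and deadline, using hypothetical `Qos` and `compatible` names. It is a simplified model of the request/offer idea, not the DDS API, and real middleware checks many more policies.

```python
from dataclasses import dataclass
from enum import IntEnum


class Reliability(IntEnum):
    BEST_EFFORT = 0
    RELIABLE = 1


@dataclass(frozen=True)
class Qos:
    reliability: Reliability
    deadline_s: float  # maximum promised or tolerated time between samples


def compatible(offered: Qos, requested: Qos) -> bool:
    """Return True when the offer is at least as strong as the request.

    The publisher must be at least as reliable as the subscriber asks,
    and must promise updates at least as often as the subscriber needs.
    """
    return (offered.reliability >= requested.reliability
            and offered.deadline_s <= requested.deadline_s)
```

For example, a publisher offering reliable delivery every 10 ms satisfies a subscriber that asks for best-effort data every 100 ms, but not the other way around.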

A databus also requires no servers. It uses a protocol to discover possible connections. All dataflow is directly peer-to-peer for the lowest possible latency. And, with no servers to clog or fail, the fundamental infrastructure is both scalable and reliable.

To scale as in our examples above, we must combine hierarchical subsystems; that’s important to fog. This requires a component that isolates subsystem interfaces, a “fog routing node”. Note that this is a conceptual term. It does not have to be, and often is not, implemented as a hardware device. It is usually implemented as a service, or running application. That service can run anywhere needed: on the device itself, in a separate box, or in the higher-level system. Its function is to “wrap a box around” a subsystem, thus hiding the complexity. The subsystem thus exports only the needed data, allows only controlled access, and even presents a single security domain (certificate). Also, because the databus so naturally supports redundancy, the service design allows highly reliable systems to simply run many parallel routing nodes.


- Hierarchical systems require containment of subsystem internal data. The fog routing node maps data models between levels, controls information export, enables fast internal discovery, and maps security domains. The external interface is thus a much simpler view that hides the internal system.
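A fog routing node can be sketched as a small service that forwards only exported topics and translates them into the external data model on the way out. The class, topic names, and field names below are hypothetical and chosen for the ventilator example; security-domain mapping and discovery are left out of this toy.

```python
from typing import Callable, Dict, Tuple

Sample = Dict[str, float]
Mapping = Callable[[Sample], Sample]


class FogRoutingNode:
    """Toy gateway between a subsystem's internal topics and the outside."""

    def __init__(self, forward: Callable[[str, Sample], None]) -> None:
        self._forward = forward  # how samples reach the next layer up
        self._routes: Dict[str, Tuple[str, Mapping]] = {}

    def export(self, internal_topic: str, external_topic: str,
               mapping: Mapping) -> None:
        # Only topics registered here are visible outside the subsystem.
        self._routes[internal_topic] = (external_topic, mapping)

    def on_internal_sample(self, topic: str, sample: Sample) -> bool:
        route = self._routes.get(topic)
        if route is None:
            return False  # internal-only data never leaves the subsystem
        external_topic, mapping = route
        self._forward(external_topic, mapping(sample))
        return True


outbox = []
node = FogRoutingNode(lambda topic, sample: outbox.append((topic, sample)))
node.export("vent/breath_cycle", "patient/respiration",
            lambda s: {"breaths_per_min": s["cycles_per_s"] * 60})
node.on_internal_sample("vent/motor_current", {"amps": 1.2})
node.on_internal_sample("vent/breath_cycle", {"cycles_per_s": 0.25})
```

The motor current stays inside the ventilator, while the breath cycle leaves as a simpler physiological value. Since the node keeps no per-sample state in this sketch, several copies could run in parallel for redundancy.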

RTI has immense experience with this design, with over 1000 projects. These include fast 3kHz feedback loops for robotics, NASA KSC’s huge 300k-point launch control SCADA system, Siemens Wind Power’s largest offshore turbine farms, the Grand Coulee dam, GE Healthcare’s CT imaging and patient monitoring product lines, almost all Navy ships of the US and its allies, Joy Global’s continuous mining machines, many pilotless drones and ground stations, Audi’s hardware-in-the-loop testing environment, and a growing list of autonomous car and truck designs.

The key benefits of a databus include:

- Reliability: Easy redundancy and no servers to fail allow extremely reliable operation. The DDS databus supports systems that cannot tolerate being offline even for a short period, whether 5 minutes or 5 milliseconds.

- Real-time: Databus peer-to-peer delivery easily supports latencies measured in milliseconds and even tens of microseconds.

- Interface scale: Large software projects with more than 10 interacting modules must carefully define, coordinate, and evolve interfaces. Data-centric technology moves this responsibility from manual processes to automatic, enforced infrastructure. RTI has experience with systems with over 1500 teams of programmers building thousands of interacting applications.

- Data scale: When systems grow large, they must control dataflow. It’s simply not practical to send everything to every application. The databus allows filtering by content, rate, and more. Thus, applications receive only what they truly need. This greatly reduces both network and processor load. This is critical for any system with more than 1000 independently-addressable data items.

- Architecture: Data-centricity is not easily “added” to a system. It is instead adopted as the core design. Thus, the transformation makes sense only for next-generation IIoT designs. Most system designs have lifecycles of many years.

Any system that meets most of these requirements should seriously consider a data-centric design.


The Foggy Future

Like the California fog blanket, a cloud in the right place works wonders. Databus technology enables elastic computing by reliably bringing the data where it’s needed. It supports real-time, reliable, scalable system building. Of course, communication is only one of the required functions of the evolving fog architecture. But it is key and relatively mature. It is thus driving many designs.

The Industrial IoT will change nearly every industry, including transportation, medical, power, oil and gas, agriculture, and more. It will be the primary driving trend in technology for the next several decades, the technology story of our lifetimes. Fog computing will move powerful processing currently only available in the cloud out to the field. The forecast is foggy indeed.

Stan Schneider